Indexing is a crucial aspect of search engine optimization (SEO), where search engines like Google crawl and analyze the content on your website to determine how it should appear in search results. However, there are situations where you might want to prevent certain pages from being indexed, such as when the content is outdated or irrelevant, or when you need to protect sensitive information. Blocking indexing might seem like a reasonable solution, but it’s important to understand the potential risks that come with it.

What is Indexing and Why Does it Matter?

At its core, indexing is the process by which search engines catalog the content on your site, making it discoverable when users search for related queries. When your pages are indexed, they are eligible to appear in search engine results, increasing your site’s visibility and attracting more traffic. But not all pages should be indexed, and there are cases where you might want to block certain pages from appearing in search results.

While it may seem like blocking indexing is a simple solution for managing your website’s visibility, it comes with its own set of challenges. Misuse or overuse of indexing-blocking tactics can harm your site’s SEO performance and reduce its overall effectiveness in attracting traffic.

What Does Blocking Indexing Mean?

Blocking indexing means keeping specific pages on your website out of search engine results. This can be done through several methods, and they do not all work the same way. A robots.txt file tells crawlers not to fetch a URL at all. A noindex directive, delivered either as a robots meta tag in the page or as an X-Robots-Tag HTTP header, lets crawlers fetch the page but asks them not to list it. By choosing the right method for each page, you aim to control which parts of your site appear in search engine results.
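To make the robots.txt method concrete, here is a minimal sketch in Python that checks whether a URL is blocked from crawling. It uses the standard library's `urllib.robotparser`. The robots.txt rules and the `example.com` URLs are made up for illustration, and `is_crawl_allowed` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /drafts/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_crawl_allowed(parser: RobotFileParser, url: str, user_agent: str = "*") -> bool:
    """Return True if the rules allow the given user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
for url in ("https://example.com/admin/login", "https://example.com/blog/post"):
    print(url, "crawlable" if is_crawl_allowed(parser, url) else "blocked from crawling")
```

Note that this only answers whether a crawler may fetch the page. It says nothing about whether the page carries a noindex directive.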

Why Block Indexing?

There are several legitimate reasons why website owners may want to block indexing:

- Privacy Concerns: You might have pages with sensitive information that shouldn’t be visible in search engine results.

- Avoiding Duplicate Content: If your website has multiple pages with similar content, blocking some of them from being indexed can help keep the duplicates from competing with one another and diluting your ranking signals.

- Reducing Low-Quality Pages: If some pages on your website are deemed low-value or don’t contribute positively to user experience or SEO, blocking their indexing can help maintain a cleaner site.

Potential Risks of Blocking Indexing

While blocking indexing can have its advantages, it also comes with significant risks that could negatively impact your site’s search engine performance. Here are some of the key risks to consider:

1. Loss of Search Visibility

One of the most immediate risks of blocking indexing is losing visibility in search results. Pages that are blocked from indexing won’t appear in search engine results, which can severely impact your traffic if important pages are mistakenly blocked. For example, blocking a high-converting landing page from being indexed could lead to a significant drop in traffic and sales.

2. Broken Internal Links

Blocking a page from indexing can lead to issues with internal linking. Links that point to blocked pages still work for visitors, but they can become dead ends for crawlers: a page disallowed in robots.txt cannot be fetched, so the links on it cannot be followed, and a page left under noindex for a long time may eventually have its links ignored as well. This can disrupt your site’s link structure, hinder crawlers’ ability to navigate your site efficiently, and reduce the overall SEO effectiveness of your website.

3. Inefficient Crawling

When noindex is applied to too many pages, search engine crawlers may spend their time inefficiently. Search engines allocate a limited amount of resources to crawling a website, and a page carrying noindex still has to be fetched before the directive can be read. If crawlers spend much of that allocation on pages that will never be listed, they might not get around to the important pages you want to rank. This can negatively impact your site’s performance and SEO results.

4. Risk of Search Engine Penalties

Improperly using indexing-blocking techniques, such as applying the noindex tag to the wrong pages or blocking important pages through robots.txt, sends search engines conflicting signals. In most cases the result is lost rankings rather than a formal penalty. However, if a pattern of blocking looks like an attempt to manipulate rankings, search engines may respond with penalties that harm your site’s search engine ranking.

Best Practices for Blocking Indexing

To avoid the negative effects of blocking indexing, it’s essential to follow some best practices:

1. Use the Noindex Tag Wisely

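A robots.txt rule only stops crawlers from fetching a page. The URL can still show up in search results if other pages link to it. To keep a page out of results, consider using the noindex meta tag instead. This allows search engines to crawl the page but prevents it from appearing in search results. For noindex to work, the page must not also be disallowed in robots.txt, because crawlers have to fetch the page in order to see the tag.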
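As a rough illustration of what a noindex check might look like, the sketch below scans a page’s HTML for a robots meta tag and also inspects an `X-Robots-Tag` response header. It uses only Python’s standard library. `RobotsMetaParser` and `has_noindex` are hypothetical names, and the parsing is deliberately simplified: it ignores crawler-specific meta names such as `googlebot` and user-agent prefixes inside the header value.

```python
from html.parser import HTMLParser
from typing import Dict, Optional


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None for valueless attributes.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str, headers: Optional[Dict[str, str]] = None) -> bool:
    """Return True if the HTML or the response headers carry a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)

    header_directives = set()
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            header_directives.update(d.strip().lower() for d in value.split(","))

    return "noindex" in parser.directives or "noindex" in header_directives


# Illustrative input: a page that allows link following but opts out of indexing.
sample_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(sample_html))
```

The header check matters for non-HTML files such as PDFs, which cannot carry a meta tag.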

2. Conduct Regular Audits

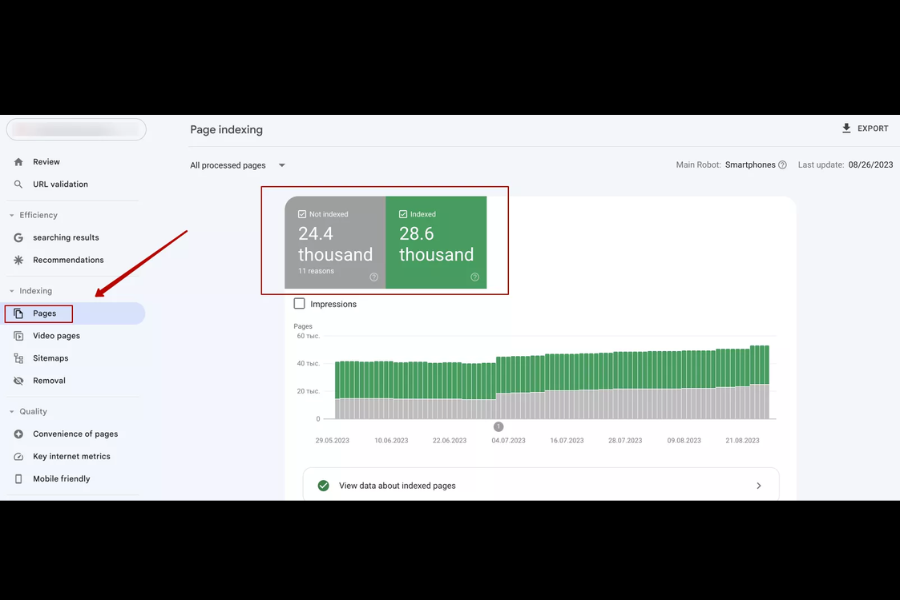

Regularly audit the pages you’ve blocked from indexing to ensure you’re not unintentionally blocking important content. Tools like Google Search Console can help you track which pages are being indexed and alert you to any problems.
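A simple audit of this kind can also be scripted. The sketch below is one possible approach, not a replacement for Google Search Console. It assumes you already have a list of pages with a flag for whether each carries noindex and whether you consider it a key page. The `PageRecord` structure, the `audit` function, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple
from urllib.robotparser import RobotFileParser


@dataclass
class PageRecord:
    url: str
    noindex: bool      # True if the page carries a noindex directive
    is_key_page: bool  # True if the page should rank (e.g. a landing page)


def audit(robots_txt: str, pages: List[PageRecord], user_agent: str = "*") -> List[Tuple[str, str]]:
    """Return (url, problem) pairs for blocking rules that look like mistakes."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    findings = []
    for page in pages:
        crawlable = parser.can_fetch(user_agent, page.url)
        if page.is_key_page and page.noindex:
            findings.append((page.url, "key page carries noindex"))
        if page.is_key_page and not crawlable:
            findings.append((page.url, "key page is disallowed in robots.txt"))
        if page.noindex and not crawlable:
            # Crawlers cannot see a noindex directive on a page they may not fetch.
            findings.append((page.url, "noindex cannot be read because crawling is disallowed"))
    return findings


# Illustrative data only.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"
PAGES = [
    PageRecord("https://example.com/pricing", noindex=True, is_key_page=True),
    PageRecord("https://example.com/private/report", noindex=True, is_key_page=False),
    PageRecord("https://example.com/blog/post", noindex=False, is_key_page=False),
]

findings = audit(ROBOTS_TXT, PAGES)
for url, problem in findings:
    print(f"{url}: {problem}")
```

Running a check like this on a schedule can surface an accidental noindex on a landing page before it shows up as a drop in traffic.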

3. Prioritize Key Pages

Make sure your most important pages—such as those that drive conversions or contain critical content—are accessible to search engines. These pages should never be blocked from indexing unless there’s a very good reason.

4. Consider SEO Goals When Blocking Indexing

Always evaluate your decision to block indexing against your broader SEO objectives. Blocking indexing should never be done impulsively. Instead, it should be a carefully thought-out strategy that aligns with your site’s long-term goals.

Summary:

Blocking indexing means controlling which pages appear in search engine results in order to safeguard privacy, avoid duplicate content, and optimize SEO performance. While blocking certain pages can be beneficial, it risks reducing search visibility, disrupting internal linking, and leading to inefficient crawling. Implementing best practices such as using the noindex tag wisely, conducting regular audits, prioritizing key pages, and aligning decisions with SEO goals ensures an effective strategy that minimizes negative consequences.

FAQs:

- What does blocking indexing mean?

Blocking indexing refers to preventing search engines from crawling or displaying specific pages in search results.

- Why should you block indexing?

To protect sensitive information, avoid duplicate content, reduce low-quality pages, or control which parts of your site appear in search results.

- What are the risks of blocking indexing?

Risks include loss of search visibility, broken internal links, inefficient crawling, and potential search engine penalties.

- How can you block a page from indexing?

Pages can be blocked using methods like robots.txt files, noindex meta tags, or HTTP headers.

- How do you avoid SEO penalties when blocking indexing?

Use indexing-blocking techniques correctly, conduct regular audits, and ensure alignment with your SEO objectives.

Facts:

- Indexing is the process by which search engines catalog web content for search results.

- Blocking indexing can be achieved through tools like robots.txt files and noindex meta tags.

- Incorrectly blocking pages can reduce a site’s visibility and harm SEO performance.

- Regular audits with tools like Google Search Console can help monitor indexing activity.

- Blocking critical pages by mistake may lead to significant drops in traffic and conversions.
